- #INSTALL APACHE SPARK OVER HADOOP CLUSTER DRIVER#

- #INSTALL APACHE SPARK OVER HADOOP CLUSTER ARCHIVE#

- #INSTALL APACHE SPARK OVER HADOOP CLUSTER INSTALL#

The generated image isn't designed to have a small footprint (the image size is about 1 GB). We've created a simpler version of a Spark cluster in docker-compose. The main goal of this cluster is to give you a local environment to test the distributed nature of your Spark apps without deploying anything to a production cluster.

#INSTALL APACHE SPARK OVER HADOOP CLUSTER DRIVER#

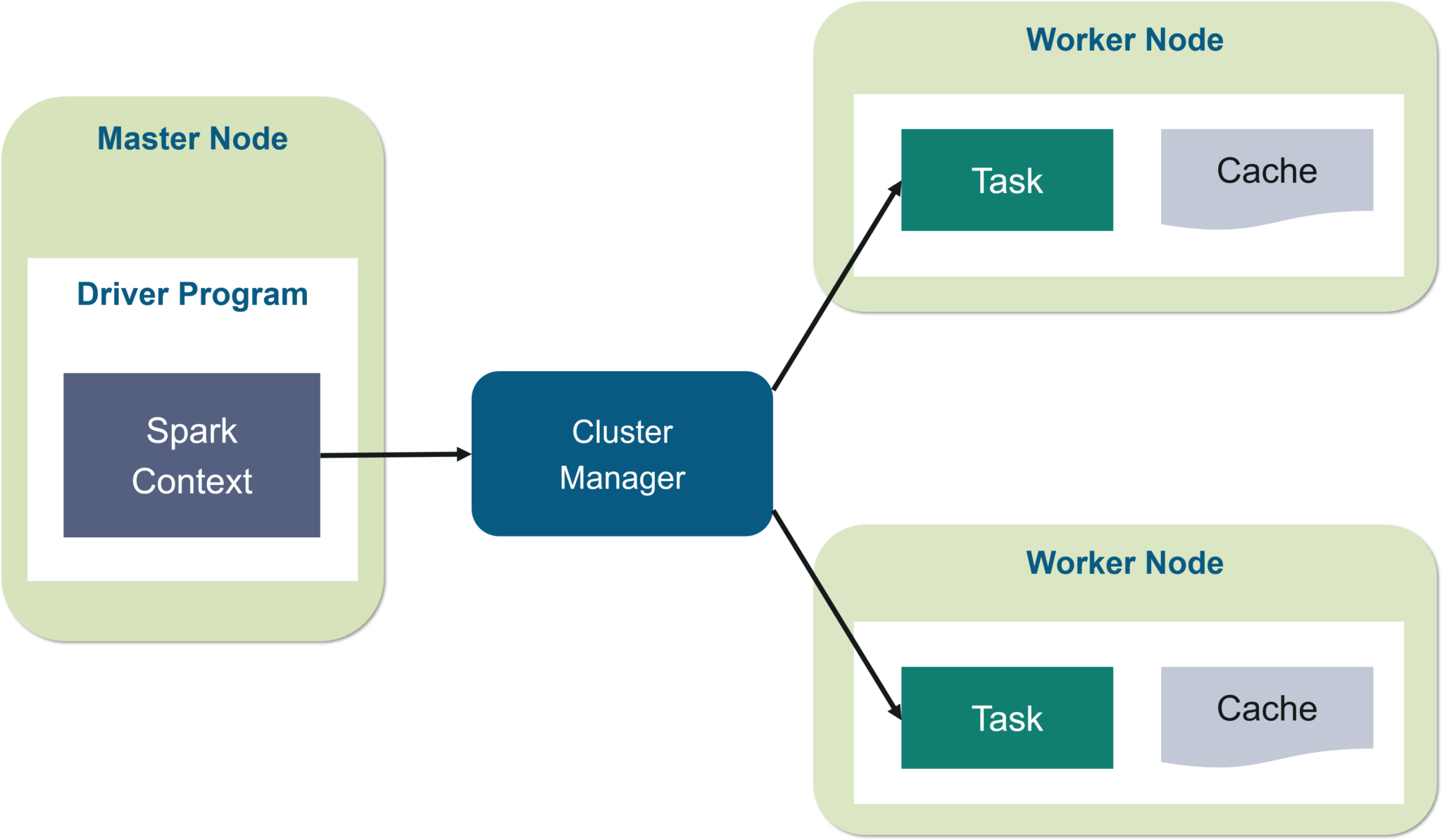

You will notice on the spark-ui a driver program and executor program running(In scala we can use deploy-mode cluster) data:/opt/spark-data demo-database : image : postgres:11.7-alpine ports : - " 5432:5432" environment : - POSTGRES_PASSWORD=casa1234Įnter fullscreen mode Exit fullscreen mode data:/opt/spark-data spark-worker-b : image : cluster-apache-spark:3.0.2 ports : - " 9092:8080" - " 7001:7000" depends_on : - spark-master environment : - SPARK_MASTER=spark://spark-master:7077 - SPARK_WORKER_CORES=1 - SPARK_WORKER_MEMORY=1G - SPARK_DRIVER_MEMORY=1G - SPARK_EXECUTOR_MEMORY=1G - SPARK_WORKLOAD=worker - SPARK_LOCAL_IP=spark-worker-b volumes :. data:/opt/spark-data environment : - SPARK_LOCAL_IP=spark-master - SPARK_WORKLOAD=master spark-worker-a : image : cluster-apache-spark:3.0.2 ports : - " 9091:8080" - " 7000:7000" depends_on : - spark-master environment : - SPARK_MASTER=spark://spark-master:7077 - SPARK_WORKER_CORES=1 - SPARK_WORKER_MEMORY=1G - SPARK_DRIVER_MEMORY=1G - SPARK_EXECUTOR_MEMORY=1G - SPARK_WORKLOAD=worker - SPARK_LOCAL_IP=spark-worker-a volumes :. Ln -sf /dev/stdout $SPARK_WORKER_LOG COPY start-spark.sh / CMD SPARK_WORKER_LOG=/opt/spark/logs/spark-worker.out \ SPARK_MASTER_LOG=/opt/spark/logs/spark-master.out \ # Apache spark environment FROM builder as apache-spark WORKDIR /opt/spark ENV SPARK_MASTER_PORT=7077 \

& tar -xf apache-spark.tgz -C /opt/spark -strip-components =1 \

#INSTALL APACHE SPARK OVER HADOOP CLUSTER ARCHIVE#

# Download and uncompress spark from the apache archive RUN wget -no-verbose -O apache-spark.tgz " $. # Fix the value of PYTHONHASHSEED # Note: this is needed when you use Python 3.3 or greater ENV SPARK_VERSION=3.0.2 \ RUN update-alternatives -install "/usr/bin/python" "python" " $(which python3 ) " 1

#INSTALL APACHE SPARK OVER HADOOP CLUSTER INSTALL#

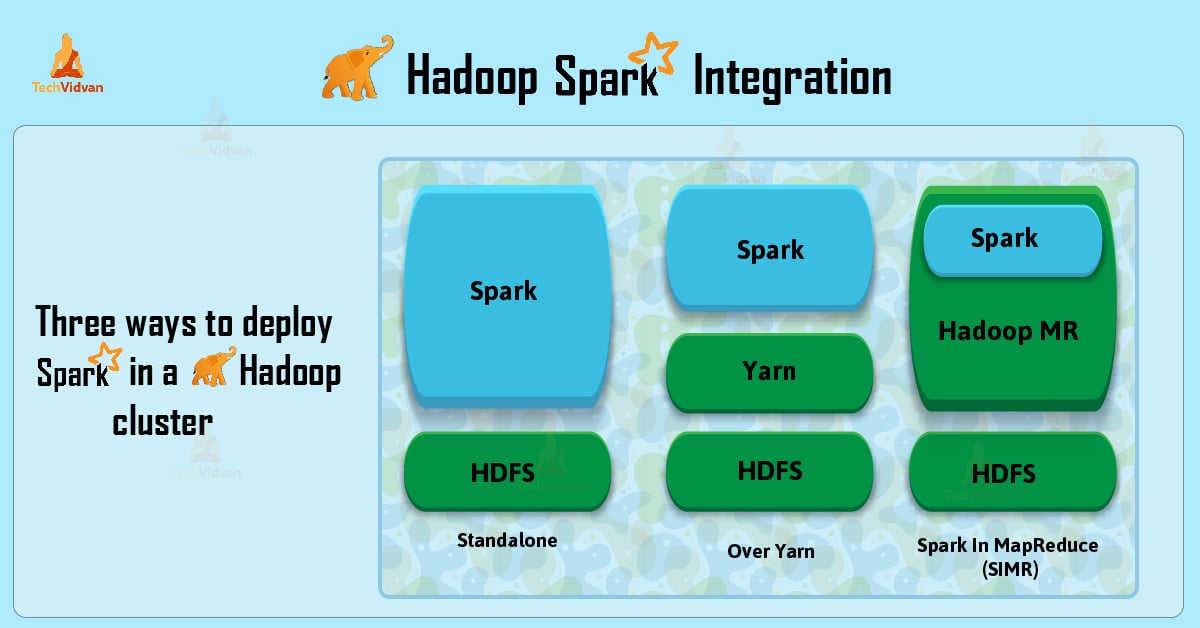

vi ~/.# builder step used to download and configure spark environment FROM openjdk:11.0.11-jre-slim-buster as builder # Add Dependencies for PySpark RUN apt-get update & apt-get install -y curl vim wget software-properties-common ssh net-tools ca-certificates python3 python3-pip python3-numpy python3-matplotlib python3-scipy python3-pandas python3-simpy cd scp -r /usr/local/Īfter that we will set the environment variables in the EdgeNode accordingly. Logon to the EdgeNode & secure copy the Spark directory from the NameNode. Let us configure of EdgeNode or Client Node to access Spark. Also the Spark Application UI is available at. In our case we have setup the NameNode as Spark Master Node. jpsīrowse the Spark Master UI for details about the cluster resources (like CPU, memory), worker nodes, running application, segments, etc. Let us validate the services running in NameNode as well as in the DataNodes. So it’s time to start the SPARK services. Well we are done with the installation & configuration. Repeat the above step for all the other DataNodes. Next we need to update the Environment configuration of Spark in all the DataNodes. cd scp -r spark scp -r spark DataNode2:/usr/local We will secure copy the spark directory with the binaries and configuration files from the NameNode to the DataNodes. Now we have to configure our DataNodes to act as Slave Servers. Open the slaves file & add the datanode hostnames. Next we have to list down DataNodes which will act as the Slave server. cd cp spark-env.sh.template vi spark-env.shĮxport JAVA_HOME=/usr/lib/jvm/java-7-oracle/jreĮxport HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop Copy the template file and then open spark-env.sh file and append the lines to the file. Next we need to configure Spark environment script in order to set the Java Home & Hadoop Configuration Directory. Append below lines to the file and source the environment file. We will install Spark under /usr/local/ directory. 
In the time of writing this article, Spark 2.0.0 is the latest stable version. Select the Hadoop Version Compatible Spark Stable Release from the below link Lets ssh login to our NameNode & start the Spark installation.

We will configure our cluster to host the Spark Master Server in our NameNode & Slaves in our DataNodes.
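The NameNode-side steps above can be scripted: creating `spark-env.sh` from its template, listing the DataNodes in the `slaves` file, and copying the `spark` directory to each DataNode. The following is a minimal POSIX shell sketch under stated assumptions, not the article's own procedure:

- The function names `configure_spark_env`, `write_slaves` and `distribute_spark` are hypothetical.
- The Spark configuration directory is passed in explicitly, because the article does not preserve its path.
- `DRY_RUN` is an illustrative switch that prints the `scp` commands instead of running them.

```shell
#!/bin/sh
# Minimal sketch (not the article's own script) of the NameNode-side Spark
# configuration steps. All function names here are hypothetical.
set -eu

# Create spark-env.sh from its template (unless it already exists) and append
# the JAVA_HOME and HADOOP_CONF_DIR exports.
# Usage: configure_spark_env CONF_DIR JAVA_HOME HADOOP_CONF_DIR
configure_spark_env() {
  local conf_dir="$1" java_home="$2" hadoop_conf="$3"
  local env_file="$conf_dir/spark-env.sh"
  if [ ! -f "$env_file" ]; then
    cp "$conf_dir/spark-env.sh.template" "$env_file"
  fi
  {
    echo "export JAVA_HOME=$java_home"
    echo "export HADOOP_CONF_DIR=$hadoop_conf"
  } >> "$env_file"
}

# Overwrite the slaves file with one DataNode hostname per line.
# Usage: write_slaves CONF_DIR HOST...
write_slaves() {
  local conf_dir="$1"
  shift
  printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Copy the spark directory (run this from its parent directory) to /usr/local
# on each DataNode, mirroring `scp -r spark DataNode2:/usr/local`.
# With DRY_RUN=1 the scp commands are printed instead of executed.
# Usage: distribute_spark HOST...
distribute_spark() {
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r spark $node:/usr/local"
    else
      scp -r spark "$node:/usr/local"
    fi
  done
}
```

The following assumes Spark was installed as `/usr/local/spark`, which the article does not state. A run on the NameNode might then look like this:

1. `configure_spark_env /usr/local/spark/conf /usr/lib/jvm/java-7-oracle/jre /usr/local/hadoop/etc/hadoop`
2. `write_slaves /usr/local/spark/conf DataNode1 DataNode2`, where `DataNode1` is an example hostname.
3. From `/usr/local`, `distribute_spark DataNode1 DataNode2`.

`configure_spark_env` appends on every call, so running it twice leaves duplicate exports in `spark-env.sh`. This is harmless, since the last assignment wins, but untidy.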
